By Alex Zarifis and the TrustUpdate.com team

The capabilities of Artificial Intelligence (AI) are increasing dramatically, and the technology is disrupting insurance and healthcare. In insurance, AI is used to detect fraudulent claims, and chatbots use natural language processing to interact with the consumer. In healthcare, it is used to make diagnoses and plan treatment. The consumer benefits from customized health insurance offers and real-time adaptation of fees. Currently, however, the interface between AI and the consumer purchasing health insurance raises barriers such as insufficient trust and privacy concerns.

Consumers are not passive about the growing role of AI. Many have beliefs about what this technology should do. Furthermore, regulation is moving toward requiring that the use of AI be explicitly revealed to the consumer (European Commission 2019). The consumer is therefore an important stakeholder, and their perspective should be understood and incorporated into future AI solutions in health insurance.

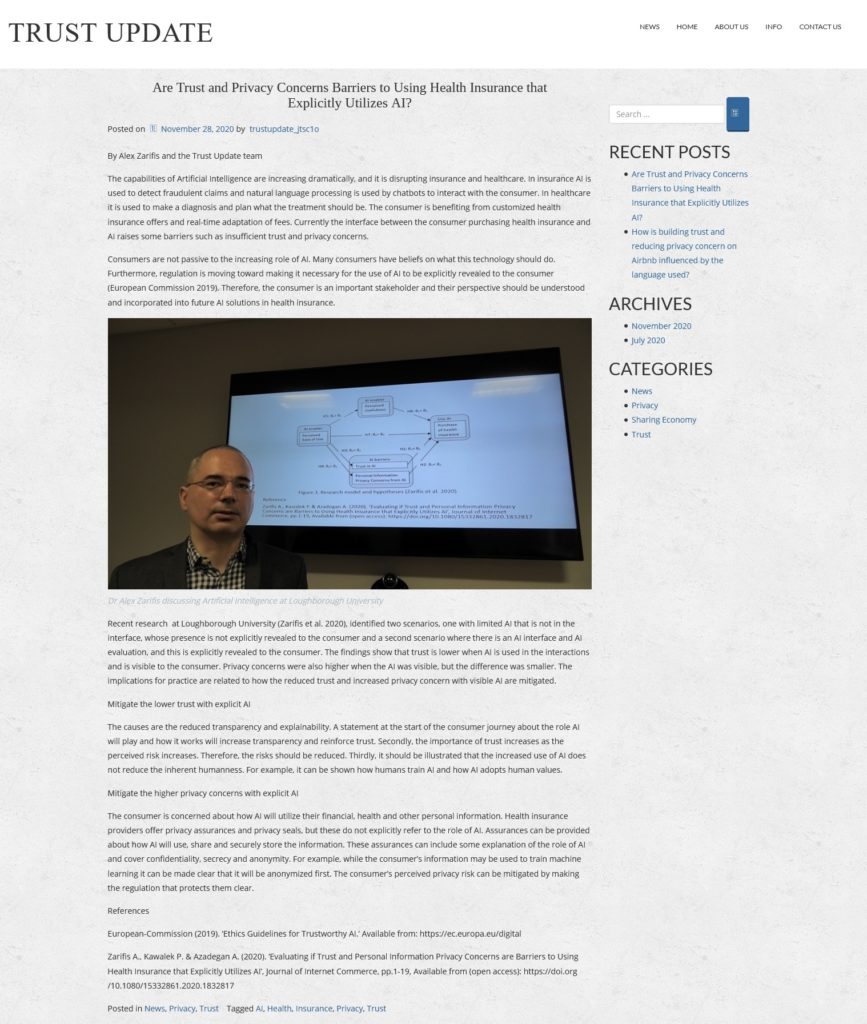

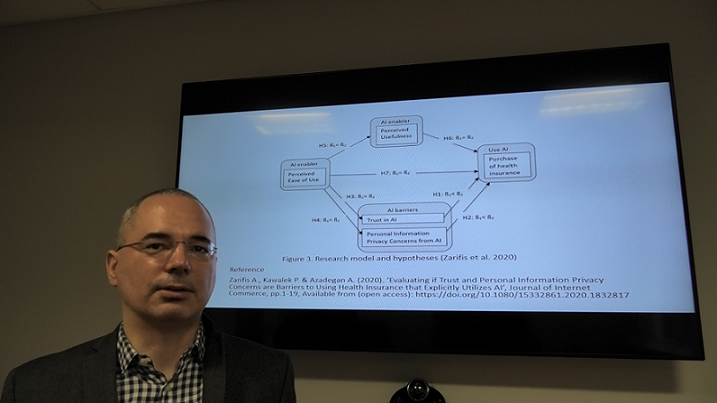

Recent research at Loughborough University (Zarifis et al. 2020) identified two scenarios. In the first, there is limited AI that is not in the interface, and its presence is not explicitly revealed to the consumer. In the second, there is an AI interface and AI evaluation, and this is explicitly revealed to the consumer. The findings show that trust is lower when AI is used in the interactions and is visible to the consumer. Privacy concerns were also higher when the AI was visible, but the difference was smaller. The implications for practice relate to how the reduced trust and increased privacy concerns with visible AI can be mitigated.

Mitigate the lower trust with explicit AI

The causes of the lower trust are reduced transparency and explainability. Firstly, a statement at the start of the consumer journey about the role AI will play and how it works will increase transparency and reinforce trust. Secondly, the importance of trust increases as the perceived risk increases, so the risks the consumer perceives should be reduced. Thirdly, it should be illustrated that the increased use of AI does not reduce the inherent humanness of the service. For example, it can be shown how humans train AI and how AI adopts human values.

Mitigate the higher privacy concerns with explicit AI

The consumer is concerned about how AI will use their financial, health and other personal information. Health insurance providers offer privacy assurances and privacy seals, but these do not explicitly refer to the role of AI. Assurances can be provided about how AI will use, share and securely store the information. These assurances can include some explanation of the role of AI and cover confidentiality, secrecy and anonymity. For example, while the consumer’s information may be used to train machine learning models, it can be made clear that it will be anonymized first. The consumer’s perceived privacy risk can also be mitigated by clearly communicating the regulation that protects them.
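
To make the idea of anonymizing information before it is used for training more concrete, here is a minimal sketch in Python. It is an illustration only and is not taken from the research cited above. The field names (`customer_id`, `name`, `email`, `address`, `age`), the `deidentify` function and the salt are all hypothetical. The sketch removes direct identifiers, replaces the customer ID with a salted hash and generalizes the exact age into a ten-year band. Strictly speaking this is pseudonymization and generalization; whether it is sufficient to count as anonymization depends on the data and on the applicable regulation.

```python
import hashlib

# Hypothetical field names; a real insurer's schema will differ.
DIRECT_IDENTIFIERS = {"name", "email", "address"}


def deidentify(record: dict, salt: str) -> dict:
    """Return a copy of `record` with direct identifiers removed,
    the customer ID replaced by a salted hash, and age banded."""
    # Drop fields that identify the person directly.
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}

    # Replace the customer ID with a salted SHA-256 digest so that rows
    # for the same customer can still be linked without exposing the ID.
    raw_id = (salt + str(record["customer_id"])).encode("utf-8")
    cleaned["customer_id"] = hashlib.sha256(raw_id).hexdigest()

    # Generalize the exact age into a ten-year band, e.g. 37 becomes "30-39".
    decade = (record["age"] // 10) * 10
    cleaned["age"] = f"{decade}-{decade + 9}"
    return cleaned


if __name__ == "__main__":
    example = {
        "customer_id": 42,
        "name": "Jane Doe",
        "email": "jane@example.com",
        "address": "1 Example Street",
        "age": 37,
        "claims_last_year": 2,
    }
    print(deidentify(example, salt="replace-with-a-secret-salt"))
```

A privacy assurance shown to the consumer could describe steps of this kind in plain language, for example that names and contact details are removed and that ages are only used in broad bands.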

References

European Commission (2019). ‘Ethics Guidelines for Trustworthy AI.’ Available from: https://ec.europa.eu/digital

Zarifis A., Kawalek P. & Azadegan A. (2020). ‘Evaluating if Trust and Personal Information Privacy Concerns are Barriers to Using Health Insurance that Explicitly Utilizes AI’, Journal of Internet Commerce, pp.1-19. https://doi.org/10.1080/15332861.2020.1832817 (open access)

This article was first published on TrustUpdate.com: https://www.trustupdate.com/news/are-trust-and-privacy-concerns-barriers-to-using-health-insurance-that-explicitly-utilizes-ai/